Classification¶

MXNet Pytorch

MXNet¶

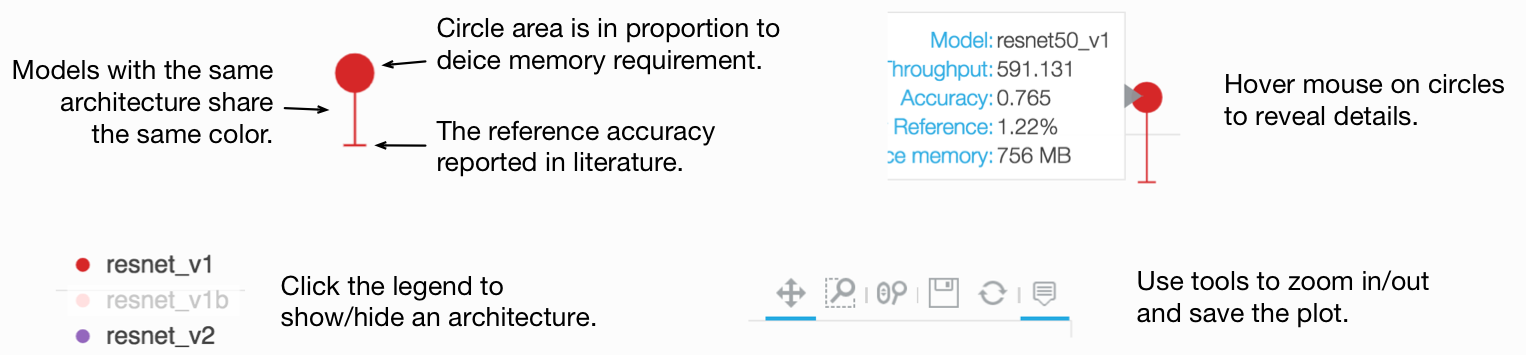

Visualization of Inference Throughputs vs. Validation Accuracy of ImageNet pre-trained models is illustrated in the following graph. Throughputs are measured with single V100 GPU and batch size 64.

How To Use Pretrained Models¶

The following example requires

GluonCV>=0.4andMXNet>=1.4.0. Please follow our installation guide to install or upgrade GluonCV and MXNet if necessary.Prepare an image by yourself or use our sample image. You can save the image into filename

classification-demo.pngin your working directory or change the filename in the source codes if you use an another name.Use a pre-trained model. A model is specified by its name.

Let’s try it out!

import mxnet as mx

import gluoncv

# you can change it to your image filename

filename = 'classification-demo.png'

# you may modify it to switch to another model. The name is case-insensitive

model_name = 'ResNet50_v1d'

# download and load the pre-trained model

net = gluoncv.model_zoo.get_model(model_name, pretrained=True)

# load image

img = mx.image.imread(filename)

# apply default data preprocessing

transformed_img = gluoncv.data.transforms.presets.imagenet.transform_eval(img)

# run forward pass to obtain the predicted score for each class

pred = net(transformed_img)

# map predicted values to probability by softmax

prob = mx.nd.softmax(pred)[0].asnumpy()

# find the 5 class indices with the highest score

ind = mx.nd.topk(pred, k=5)[0].astype('int').asnumpy().tolist()

# print the class name and predicted probability

print('The input picture is classified to be')

for i in range(5):

print('- [%s], with probability %.3f.'%(net.classes[ind[i]], prob[ind[i]]))

The output from our sample image is expected to be

The input picture is classified to be

- [Welsh springer spaniel], with probability 0.899.

- [Irish setter], with probability 0.005.

- [Brittany spaniel], with probability 0.003.

- [cocker spaniel], with probability 0.002.

- [Blenheim spaniel], with probability 0.002.

Remember, you can try different models by replacing the value of model_name.

Read further for model names and their performances in the tables.

ImageNet¶

Hint

Training commands work with this script:

A model can have differently trained parameters with different hashtags. Parameters with a grey name can be downloaded by passing the corresponding hashtag.

Download default pretrained weights:

net = get_model('ResNet50_v1d', pretrained=True)Download weights given a hashtag:

net = get_model('ResNet50_v1d', pretrained='117a384e')

ResNet50_v1_int8 and MobileNet1.0_int8 are quantized model calibrated on ImageNet dataset.

ResNet¶

Hint

ResNet50_v1_int8is a quantized model forResNet50_v1.ResNet_v1bmodifiesResNet_v1by setting stride at the 3x3 layer for a bottleneck block.ResNet_v1cmodifiesResNet_v1bby replacing the 7x7 conv layer with three 3x3 conv layers.ResNet_v1dmodifiesResNet_v1cby adding an avgpool layer 2x2 with stride 2 downsample feature map on the residual path to preserve more information.

Model |

Top-1 |

Top-5 |

Hashtag |

Training Command |

Training Log |

|---|---|---|---|---|---|

ResNet18_v1 1 |

70.93 |

89.92 |

a0666292 |

||

ResNet34_v1 1 |

74.37 |

91.87 |

48216ba9 |

||

ResNet50_v1 1 |

77.36 |

93.57 |

cc729d95 |

||

ResNet50_v1_int8 1 |

76.86 |

93.46 |

cc729d95 |

||

ResNet101_v1 1 |

78.34 |

94.01 |

d988c13d |

||

ResNet152_v1 1 |

79.22 |

94.64 |

acfd0970 |

||

ResNet18_v1b 1 |

70.94 |

89.83 |

2d9d980c |

||

ResNet34_v1b 1 |

74.65 |

92.08 |

8e16b848 |

||

ResNet50_v1b 1 |

77.67 |

93.82 |

0ecdba34 |

||

ResNet50_v1b_gn 1 |

77.36 |

93.59 |

0ecdba34 |

||

ResNet101_v1b 1 |

79.20 |

94.61 |

a455932a |

||

ResNet152_v1b 1 |

79.69 |

94.74 |

a5a61ee1 |

||

ResNet50_v1c 1 |

78.03 |

94.09 |

2a4e0708 |

||

ResNet101_v1c 1 |

79.60 |

94.75 |

064858f2 |

||

ResNet152_v1c 1 |

80.01 |

94.96 |

75babab6 |

||

ResNet50_v1d 1 |

79.15 |

94.58 |

117a384e |

||

ResNet50_v1d 1 |

78.48 |

94.20 |

00319ddc |

||

ResNet101_v1d 1 |

80.51 |

95.12 |

1b2b825f |

||

ResNet101_v1d 1 |

79.78 |

94.80 |

8659a9d6 |

||

ResNet152_v1d 1 |

80.61 |

95.34 |

cddbc86f |

||

ResNet152_v1d 1 |

80.26 |

95.00 |

cfe0220d |

||

ResNet18_v2 2 |

71.00 |

89.92 |

a81db45f |

||

ResNet34_v2 2 |

74.40 |

92.08 |

9d6b80bb |

||

ResNet50_v2 2 |

77.11 |

93.43 |

ecdde353 |

||

ResNet101_v2 2 |

78.53 |

94.17 |

18e93e4f |

||

ResNet152_v2 2 |

79.21 |

94.31 |

f2695542 |

ResNext¶

Model |

Top-1 |

Top-5 |

Hashtag |

Training Command |

Training Log |

|---|---|---|---|---|---|

ResNext50_32x4d 12 |

79.32 |

94.53 |

4ecf62e2 |

||

ResNext101_32x4d 12 |

80.37 |

95.06 |

8654ca5d |

||

ResNext101_64x4d_v1 12 |

80.69 |

95.17 |

2f0d1c9d |

||

79.95 |

94.93 |

7906e0e1 |

|||

80.91 |

95.39 |

688e2389 |

|||

81.01 |

95.32 |

11c50114 |

ResNeSt¶

Model |

Top-1 |

Top-5 |

Hashtag |

Training Command |

Training Log |

|---|---|---|---|---|---|

ResNeSt14 17 |

75.75 |

92.70 |

7e0b0cae |

||

ResNeSt26 17 |

78.68 |

94.38 |

36459074 |

||

ResNeSt50 17 |

81.04 |

95.42 |

bcfefe1d |

||

ResNeSt101 17 |

82.83 |

96.42 |

5da943b3 |

||

ResNeSt200 17 |

83.86 |

96.86 |

0c5d117d |

||

ResNeSt269 17 |

84.53 |

96.98 |

11ae7f5d |

MobileNet¶

Hint

MobileNet1.0_int8is a quantized model forMobileNet1.0.

Model |

Top-1 |

Top-5 |

Hashtag |

Training Command |

Training Log |

|---|---|---|---|---|---|

MobileNet1.0 4 |

73.28 |

91.30 |

efbb2ca3 |

||

MobileNet1.0_int8 4 |

72.85 |

90.99 |

efbb2ca3 |

||

MobileNet1.0 4 |

72.93 |

91.14 |

cce75496 |

||

MobileNet0.75 4 |

70.25 |

89.49 |

84c801e2 |

||

MobileNet0.5 4 |

65.20 |

86.34 |

0130d2aa |

||

MobileNet0.25 4 |

52.91 |

76.94 |

f0046a3d |

||

MobileNetV2_1.0 5 |

72.04 |

90.57 |

f9952bcd |

||

MobileNetV2_0.75 5 |

69.36 |

88.50 |

b56e3d1c |

||

MobileNetV2_0.5 5 |

64.43 |

85.31 |

08038185 |

||

MobileNetV2_0.25 5 |

51.76 |

74.89 |

9b1d2cc3 |

||

MobileNetV3_Large 15 |

75.32 |

92.30 |

eaa44578 |

||

MobileNetV3_Small 15 |

67.72 |

87.51 |

33c100a7 |

VGG¶

Model |

Top-1 |

Top-5 |

Hashtag |

Training Command |

Training Log |

|---|---|---|---|---|---|

VGG11 9 |

66.62 |

87.34 |

dd221b16 |

||

VGG13 9 |

67.74 |

88.11 |

6bc5de58 |

||

VGG16 9 |

73.23 |

91.31 |

e660d456 |

||

VGG19 9 |

74.11 |

91.35 |

ad2f660d |

||

VGG11_bn 9 |

68.59 |

88.72 |

ee79a809 |

||

VGG13_bn 9 |

68.84 |

88.82 |

7d97a06c |

||

VGG16_bn 9 |

73.10 |

91.76 |

7f01cf05 |

||

VGG19_bn 9 |

74.33 |

91.85 |

f360b758 |

SqueezeNet¶

Model |

Top-1 |

Top-5 |

Hashtag |

Training Command |

Training Log |

|---|---|---|---|---|---|

SqueezeNet1.0 10 |

56.11 |

79.09 |

264ba497 |

||

SqueezeNet1.1 10 |

54.96 |

78.17 |

33ba0f93 |

DenseNet¶

Model |

Top-1 |

Top-5 |

Hashtag |

Training Command |

Training Log |

|---|---|---|---|---|---|

DenseNet121 7 |

74.97 |

92.25 |

f27dbf2d |

||

DenseNet161 7 |

77.70 |

93.80 |

b6c8a957 |

||

DenseNet169 7 |

76.17 |

93.17 |

2603f878 |

||

DenseNet201 7 |

77.32 |

93.62 |

1cdbc116 |

Pruned ResNet¶

Model |

Top-1 |

Top-5 |

Hashtag |

Speedup (to original ResNet) |

|---|---|---|---|---|

resnet18_v1b_0.89 |

67.2 |

87.45 |

54f7742b |

2x |

resnet50_v1d_0.86 |

78.02 |

93.82 |

a230c33f |

1.68x |

resnet50_v1d_0.48 |

74.66 |

92.34 |

0d3e69bb |

3.3x |

resnet50_v1d_0.37 |

70.71 |

89.74 |

9982ae49 |

5.01x |

resnet50_v1d_0.11 |

63.22 |

84.79 |

6a25eece |

8.78x |

resnet101_v1d_0.76 |

79.46 |

94.69 |

a872796b |

1.8x |

resnet101_v1d_0.73 |

78.89 |

94.48 |

712fccb1 |

2.02x |

Others¶

Hint

InceptionV3 is evaluated with input size of 299x299.

Model |

Top-1 |

Top-5 |

Hashtag |

Training Command |

Training Log |

|---|---|---|---|---|---|

AlexNet 6 |

54.92 |

78.03 |

44335d1f |

||

darknet53 3 |

78.56 |

94.43 |

2189ea49 |

||

darknet53 3 |

78.13 |

93.86 |

95975047 |

||

InceptionV3 8 |

78.77 |

94.39 |

a5050dbc |

||

GoogLeNet 16 |

72.87 |

91.17 |

c7c89366 |

||

Xception 8 |

79.56 |

94.77 |

37c1c90b |

||

InceptionV3 8 |

78.41 |

94.13 |

e132adf2 |

||

SENet_154 14 |

81.26 |

95.51 |

b5538ef1 |

CIFAR10¶

The following table lists pre-trained models trained on CIFAR10.

Hint

Our pre-trained models reproduce results from “Mix-Up” 13 . Please check the reference paper for further information.

Training commands in the table work with the following scripts:

For vanilla training:

Download train_cifar10.pyFor mix-up training:

Download train_mixup_cifar10.py

Model |

Acc (Vanilla/Mix-Up 13 ) |

Training Command |

Training Log |

|---|---|---|---|

CIFAR_ResNet20_v1 1 |

92.1 / 92.9 |

||

CIFAR_ResNet56_v1 1 |

93.6 / 94.2 |

||

CIFAR_ResNet110_v1 1 |

93.0 / 95.2 |

||

CIFAR_ResNet20_v2 2 |

92.1 / 92.7 |

||

CIFAR_ResNet56_v2 2 |

93.7 / 94.6 |

||

CIFAR_ResNet110_v2 2 |

94.3 / 95.5 |

||

CIFAR_WideResNet16_10 11 |

95.1 / 96.7 |

||

CIFAR_WideResNet28_10 11 |

95.6 / 97.2 |

||

CIFAR_WideResNet40_8 11 |

95.9 / 97.3 |

||

CIFAR_ResNeXt29_16x64d 12 |

96.3 / 97.3 |

PyTorch¶

Models implemented using PyTorch will be added later. Please checkout our MXNet implementation instead.

Reference¶

- 1(1,2,3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24)

He, Kaiming, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. “Deep residual learning for image recognition.” In Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 770-778. 2016.

- 2(1,2,3,4,5,6,7,8)

He, Kaiming, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. “Identity mappings in deep residual networks.” In European Conference on Computer Vision, pp. 630-645. Springer, Cham, 2016.

- 3(1,2)

Redmon, Joseph, and Ali Farhadi. “Yolov3: An incremental improvement.” arXiv preprint arXiv:1804.02767 (2018).

- 4(1,2,3,4,5,6)

Howard, Andrew G., Menglong Zhu, Bo Chen, Dmitry Kalenichenko, Weijun Wang, Tobias Weyand, Marco Andreetto, and Hartwig Adam. “Mobilenets: Efficient convolutional neural networks for mobile vision applications.” arXiv preprint arXiv:1704.04861 (2017).

- 5(1,2,3,4)

Sandler, Mark, Andrew Howard, Menglong Zhu, Andrey Zhmoginov, and Liang-Chieh Chen. “Inverted Residuals and Linear Bottlenecks: Mobile Networks for Classification, Detection and Segmentation.” arXiv preprint arXiv:1801.04381 (2018).

- 6

Krizhevsky, Alex, Ilya Sutskever, and Geoffrey E. Hinton. “Imagenet classification with deep convolutional neural networks.” In Advances in neural information processing systems, pp. 1097-1105. 2012.

- 7(1,2,3,4)

Huang, Gao, Zhuang Liu, Laurens Van Der Maaten, and Kilian Q. Weinberger. “Densely Connected Convolutional Networks.” In CVPR, vol. 1, no. 2, p. 3. 2017.

- 8(1,2,3)

Szegedy, Christian, Vincent Vanhoucke, Sergey Ioffe, Jon Shlens, and Zbigniew Wojna. “Rethinking the inception architecture for computer vision.” In Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 2818-2826. 2016.

- 9(1,2,3,4,5,6,7,8)

Karen Simonyan, Andrew Zisserman. “Very Deep Convolutional Networks for Large-Scale Image Recognition.” arXiv technical report arXiv:1409.1556 (2014).

- 10(1,2)

Iandola, Forrest N., Song Han, Matthew W. Moskewicz, Khalid Ashraf, William J. Dally, and Kurt Keutzer. “Squeezenet: Alexnet-level accuracy with 50x fewer parameters and< 0.5 mb model size.” arXiv preprint arXiv:1602.07360 (2016).

- 11(1,2,3)

Zagoruyko, Sergey, and Nikos Komodakis. “Wide residual networks.” arXiv preprint arXiv:1605.07146 (2016).

- 12(1,2,3,4,5,6,7)

Xie, Saining, Ross Girshick, Piotr Dollár, Zhuowen Tu, and Kaiming He. “Aggregated residual transformations for deep neural networks.” In Computer Vision and Pattern Recognition (CVPR), 2017 IEEE Conference on, pp. 5987-5995. IEEE, 2017.

- 13(1,2)

Zhang, Hongyi, Moustapha Cisse, Yann N. Dauphin, and David Lopez-Paz. “mixup: Beyond empirical risk minimization.” arXiv preprint arXiv:1710.09412 (2017).

- 14(1,2,3,4)

Hu, Jie, Li Shen, and Gang Sun. “Squeeze-and-excitation networks.” arXiv preprint arXiv:1709.01507 7 (2017).

- 15(1,2)

Howard, Andrew, Mark Sandler, Grace Chu, Liang-Chieh Chen, Bo Chen, Mingxing Tan, Weijun Wang et al. “Searching for mobilenetv3.” arXiv preprint arXiv:1905.02244 (2019).

- 16

Christian Szegedy, Wei Liu, Yangqing Jia, Pierre Sermanet, Scott Reed, Dragomir Anguelov, Dumitru Erhan, Vincent Vanhoucke, Andrew Rabinovich “Going Deeper with Convolutions” arXiv preprint arXiv:1409.4842 (2014).

- 17(1,2,3,4,5,6)

Hang Zhang, Chongruo Wu, Zhongyue Zhang, Yi Zhu, Zhi Zhang, Haibin Lin, Yue Sun, Tong He, Jonas Muller, R. Manmatha, Mu Li and Alex Smola “ResNeSt: Split-Attention Network” arXiv preprint (2020).